Building Secure LLM Apps into Your Business

Should you be granting LLMs keys to the kingdom?

Join us to learn about the risks of creating LLM applications that act as autonomous agents, and what can be done to mitigate these risks.

LLM-powered apps are at the forefront of businesses’ efforts to gain productivity and efficiency benefits. Many businesses are now building bespoke LLM-powered solutions.

However, with great power comes great responsibility. You need to be aware of the critical security issues associated with LLM applications, especially when they are granted access to tools and plugins to act as autonomous agents.

Gain a practical understanding of the vulnerabilities of LLM agents, and learn about the essential tools and techniques for securing your LLM-based apps.

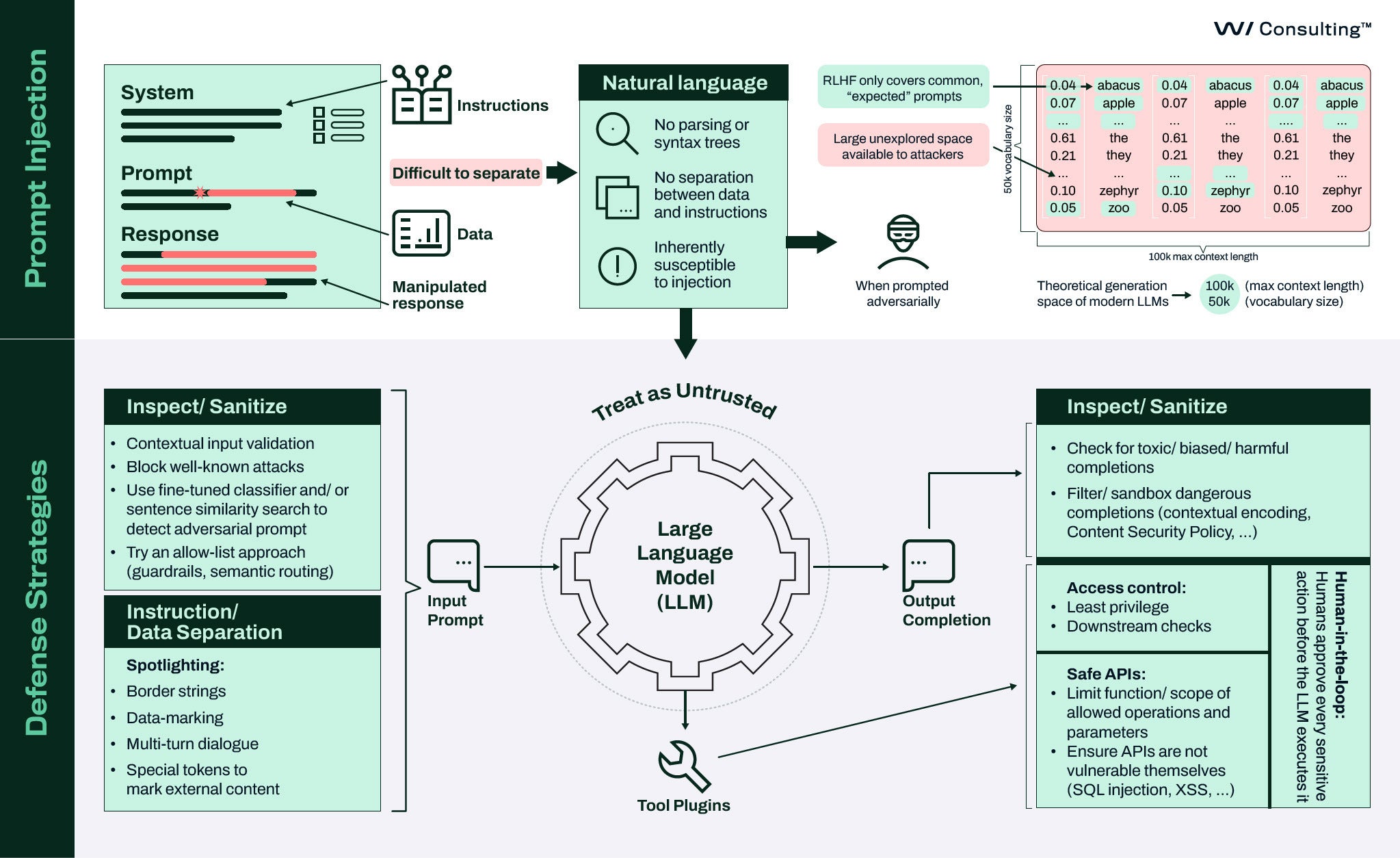

Our specialist will give an eye-opening demo of how prompt injection can manipulate LLM-powered agents into unintended and malicious outcomes. We’ll cover what prompt injection is, why LLM agents are inherently vulnerable to such attacks, and the current mitigation strategies for deploying agents securely in your organization.

This session is a must-attend for any company eager to leverage the potential of LLMs while maintaining a robust security posture.

Our speakers

Donato Capitella

Principal Security Consultant, WithSecure

With over a decade of experience in cybersecurity, Donato has led penetration testing for web applications and networks, spearheaded goal-driven adversary simulation, and led purple team activities. His current research interest is the security of autonomous agents created using Large Language Models.

Janne Kauhanen

Cyber Host & Account Director, WithSecure

For the last decade, as a “cyber translator”, Janne has been helping WithSecure consulting clients find solutions to their information security issues.

Related Content

Generative AI Security

Are you planning or developing GenAI-powered solutions, or already deploying these integrations or custom solutions?

We can help you identify and address potential cyber risks every step of the way.


Should you let ChatGPT control your browser?

This blog post explores the security implications of enabling Large Language Models (LLMs) to operate web browsers, highlighting the dangers of prompt injection vulnerabilities. It presents two case studies using Taxy AI, a prototype browser agent, to show how attackers can exploit these vulnerabilities to extract sensitive data from a user's email and manipulate the agent into merging a harmful pull request on GitHub.

A Case Study in Prompt Injection for ReAct LLM Agents

